Abstract

In this paper, we analyze the robustness of recent 3D shape descriptors to SO(3) rotations, a property that is fundamental to shape modeling. Specifically, we formulate the task of rotated 3D object instance detection. To do so, we consider a database of 3D indoor scenes in which objects occur in different orientations. We benchmark different methods for feature extraction and classification in the context of this task. We systematically contrast different choices in a variety of experimental settings, investigating how performance is affected by different rotation distributions, different degrees of partial observation of the object, and different levels of difficulty of negative pairs. Our study, on a synthetic dataset of 3D scenes where object instances occur in different orientations, reveals that deep learning-based rotation-invariant methods are effective in relatively easy settings with easy-to-distinguish pairs. However, their performance decreases significantly when the difference in rotation between the two objects of an input pair is large, when the degree of observation of the input objects is reduced, or when the difficulty level of the input pair is increased. Finally, we relate the feature encodings designed for rotation-invariant methods to the 3D geometry that enables these methods to achieve rotation invariance.

Methodology

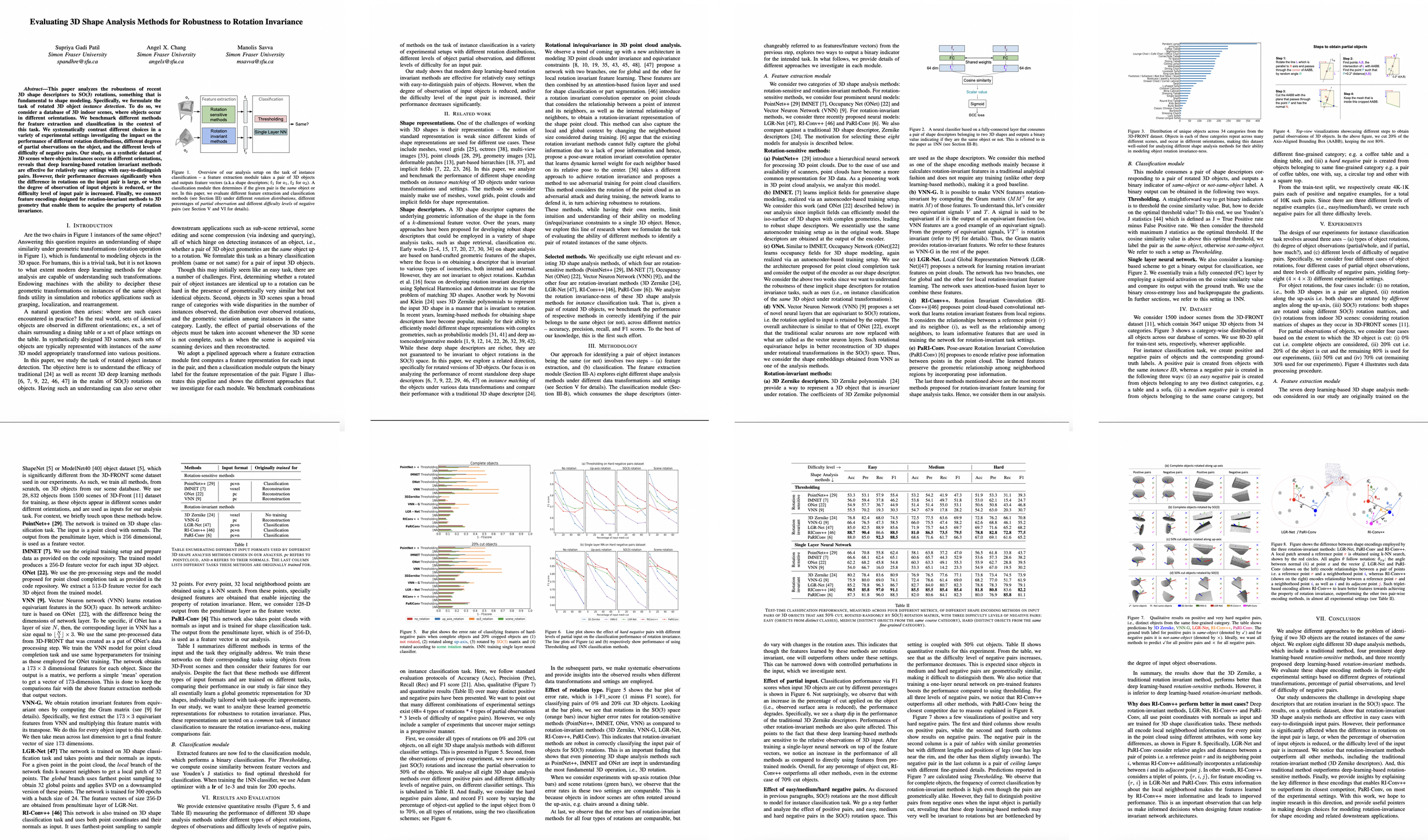

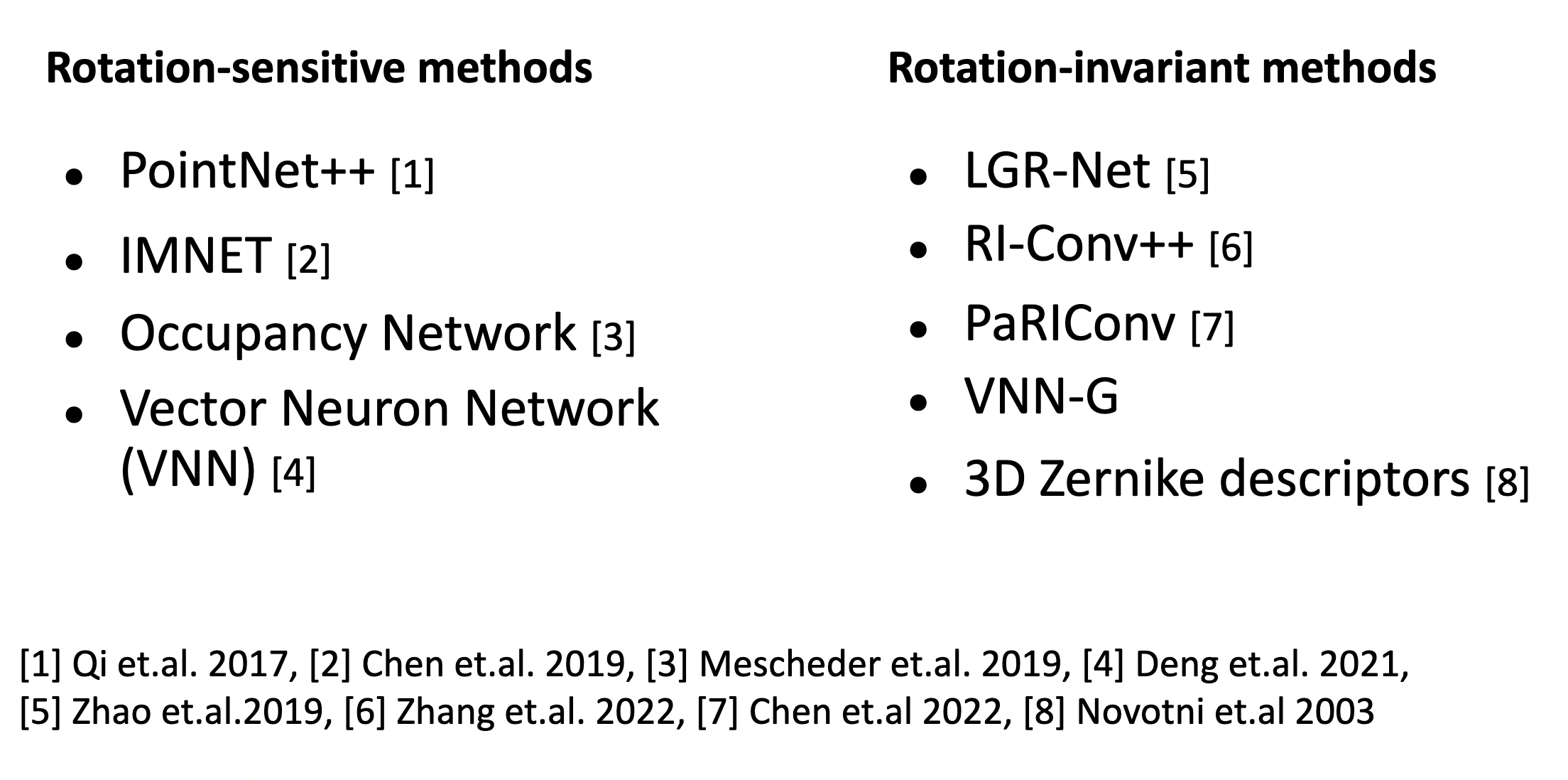

Our approach for deciding whether a pair of object instances is the same involves two steps: (a) feature extraction and (b) classification. The feature extraction module explores eight different shape analysis methods. The classification module takes the feature vectors from the previous step and explores two ways to output a binary indicator for the proposed instance classification task.

Feature Extraction Module

We consider two categories of 3D shape analysis methods: rotation-sensitive and rotation-invariant methods.
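To make the distinction concrete, here is a minimal sketch that is not one of the eight benchmarked methods: a rotation-sensitive feature (axis-aligned bounding-box extents) changes when a point cloud is rotated, whereas a D2-style shape distribution built from pairwise point distances does not, because rotations preserve distances. The function names (`random_rotation`, `bbox_extents`, `d2_descriptor`), the toy point cloud, and the use of distance quantiles instead of a histogram are illustrative assumptions.

```python
import numpy as np

def random_rotation(rng: np.random.Generator) -> np.ndarray:
    """Sample a uniformly distributed rotation matrix from SO(3) (QR of a Gaussian matrix)."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))   # sign fix makes q Haar-distributed on O(3)
    if np.linalg.det(q) < 0:      # map reflections to proper rotations (det = +1)
        q[:, 0] = -q[:, 0]
    return q

def bbox_extents(points: np.ndarray) -> np.ndarray:
    """Rotation-sensitive feature: side lengths of the axis-aligned bounding box."""
    return points.max(axis=0) - points.min(axis=0)

def d2_descriptor(points: np.ndarray, n_quantiles: int = 32) -> np.ndarray:
    """Rotation-invariant feature: quantiles of all pairwise point distances (D2-style)."""
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    upper = dists[np.triu_indices(len(points), k=1)]  # each unordered pair counted once
    return np.quantile(upper, np.linspace(0.0, 1.0, n_quantiles))

rng = np.random.default_rng(0)
points = rng.standard_normal((500, 3)) * np.array([3.0, 1.0, 0.2])  # elongated toy object
R = random_rotation(rng)
rotated = points @ R.T  # apply R to every point (one point per row)

print(np.allclose(d2_descriptor(points), d2_descriptor(rotated)))  # True
print(np.allclose(bbox_extents(points), bbox_extents(rotated)))    # almost surely False
```

Quantiles are used here rather than a fixed-bin histogram so that the comparison stays stable under floating-point round-off; a fixed-bin histogram is the more classical form of the D2 distribution.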

Classification Module

This module consumes a pair of shape descriptors corresponding to a pair of rotated 3D objects and outputs a binary 'same-object' or 'not-same-object' label. This binary output can be obtained in the following two ways.
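As one plausible illustration, not necessarily either of the two ways used in the paper, a descriptor pair can be labelled by thresholding the cosine similarity of the two feature vectors, with the threshold chosen to maximize accuracy on labelled validation pairs. The names `cosine_similarity`, `fit_threshold`, and `classify_pair`, and the threshold-selection rule, are hypothetical.

```python
import numpy as np

def cosine_similarity(f1: np.ndarray, f2: np.ndarray) -> float:
    """Cosine similarity between two descriptor vectors (epsilon avoids division by zero)."""
    return float(np.dot(f1, f2) / (np.linalg.norm(f1) * np.linalg.norm(f2) + 1e-12))

def fit_threshold(similarities: np.ndarray, labels: np.ndarray) -> float:
    """Choose the similarity threshold that maximizes accuracy on labelled validation pairs.

    `labels` is a boolean array: True marks a 'same-object' pair.
    """
    candidates = np.unique(similarities)
    accuracies = [np.mean((similarities >= t) == labels) for t in candidates]
    return float(candidates[int(np.argmax(accuracies))])

def classify_pair(f1: np.ndarray, f2: np.ndarray, threshold: float) -> str:
    """Map a descriptor pair to the binary 'same-object' / 'not-same-object' label."""
    return "same-object" if cosine_similarity(f1, f2) >= threshold else "not-same-object"

val_sims = np.array([0.95, 0.90, 0.40, 0.30])     # hypothetical validation similarities
val_labels = np.array([True, True, False, False])  # hypothetical ground-truth labels
t = fit_threshold(val_sims, val_labels)            # 0.9
print(classify_pair(np.array([1.0, 0.0]), np.array([1.0, 0.0]), t))  # same-object
print(classify_pair(np.array([1.0, 0.0]), np.array([0.0, 1.0]), t))  # not-same-object
```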

Experiments

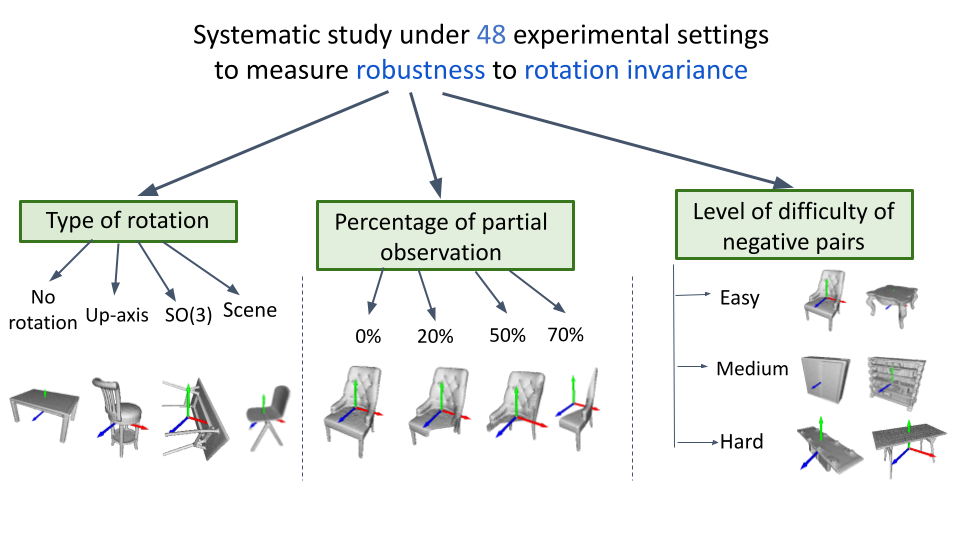

The design of our experiments for the instance classification task revolves around three axes: (a) types of object rotations, (b) degree of object observation (partial or whole, and if partial, how much), and (c) levels of difficulty of negative pairs. Specifically, we consider four cases of object rotations, four cases of partial object observation, and three levels of difficulty of negative pairs, yielding forty-eight (4 × 4 × 3) experimental settings.
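The forty-eight settings form the Cartesian product of the three axes. The sketch below enumerates such a grid and shows one crude way to simulate a partial observation. The case labels, the observation fractions, and the `partial_view` cropping rule are hypothetical placeholders, since the individual cases are not named here; the paper's actual partial-observation protocol may differ.

```python
import itertools
import numpy as np

# Hypothetical placeholder labels for the three experimental axes.
ROTATION_CASES = ["rot_case_1", "rot_case_2", "rot_case_3", "rot_case_4"]
OBSERVATION_FRACTIONS = [1.0, 0.75, 0.5, 0.25]  # hypothetical fraction of points kept
NEGATIVE_DIFFICULTY = ["easy", "medium", "hard"]

settings = list(itertools.product(ROTATION_CASES, OBSERVATION_FRACTIONS, NEGATIVE_DIFFICULTY))
print(len(settings))  # 48

def partial_view(points: np.ndarray, fraction: float, rng: np.random.Generator) -> np.ndarray:
    """Keep the `fraction` of points with the largest projection onto a random direction,
    i.e. the side facing a distant viewer placed along that direction (a crude partial scan)."""
    direction = rng.standard_normal(3)
    direction /= np.linalg.norm(direction)
    depth = points @ direction
    k = max(1, int(round(fraction * len(points))))
    keep = np.argsort(depth)[-k:]  # indices of the k points closest to the viewer
    return points[keep]

rng = np.random.default_rng(0)
obj = rng.standard_normal((1000, 3))
print(partial_view(obj, 0.25, rng).shape)  # (250, 3)
```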

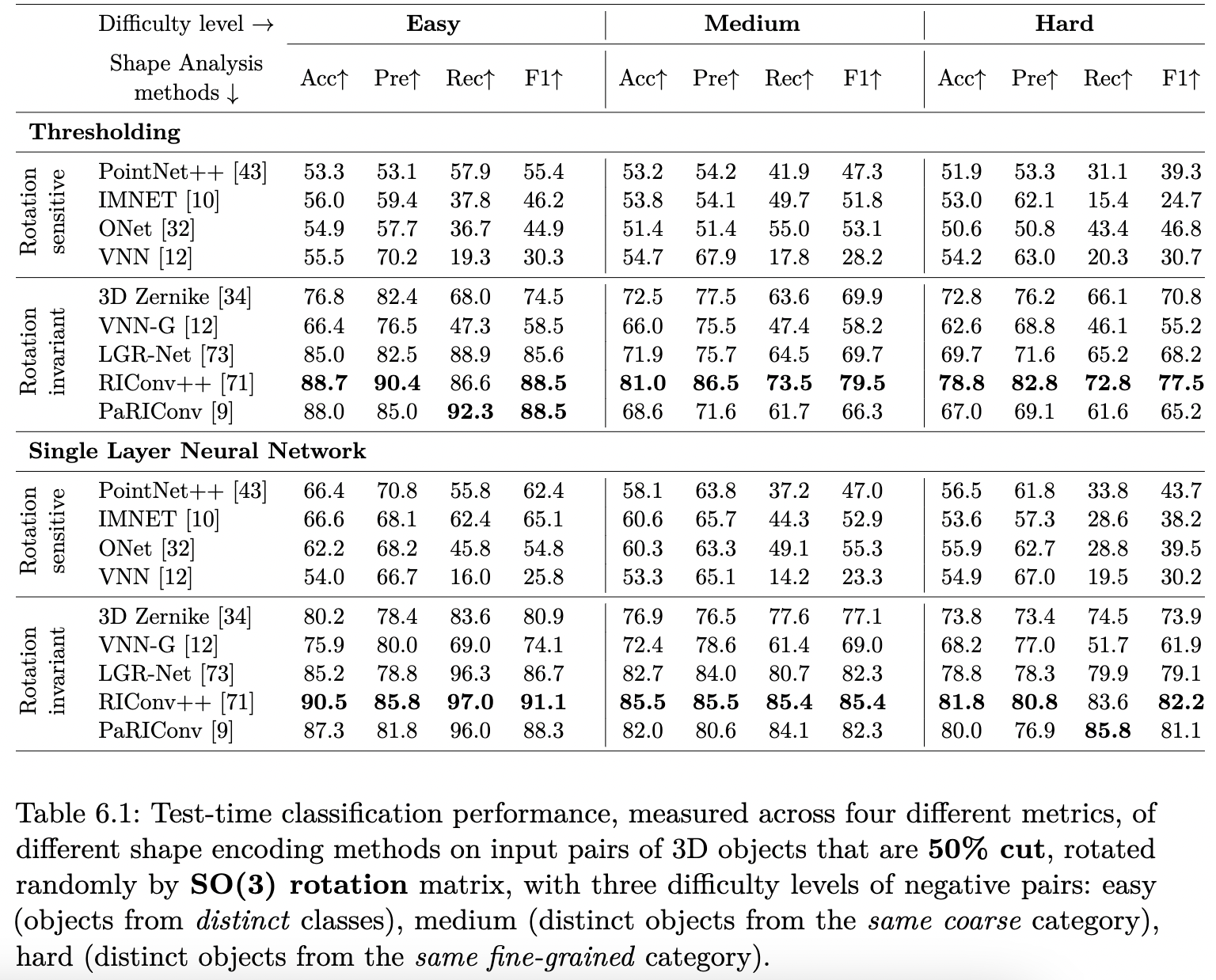

Results

We measure the performance of different 3D shape analysis methods on the instance classification task under different types of object rotations, degrees of observation, and difficulty levels of negative pairs. The table below shows the effect of negative-pair difficulty. Please see our paper for results on the other experimental settings.